# Python Operator Airflow Code

Templates can be used to determine runtime parameters (e.g. the range of data for an API call) and also make your code idempotent (each intermediary file is named for the data range it contains).
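As a minimal sketch of the idempotent naming idea: the file name can be derived from the data range alone, so rerunning the same range writes to the same file instead of creating a new one. The helper names, the `api_extract` prefix, and the one-day range are assumptions made for illustration; none of them come from the original.

```python
from datetime import date, timedelta


def daily_range(run_date):
    """Return the (start, end) dates covered by a run for run_date (assumed: one day)."""
    return run_date, run_date + timedelta(days=1)


def intermediate_filename(start, end, prefix="api_extract"):
    """Build a file name from the data range only (hypothetical naming scheme)."""
    return "{}_{}_{}.csv".format(
        prefix, start.strftime("%Y%m%d"), end.strftime("%Y%m%d")
    )


# The same run date always maps to the same file name.
start, end = daily_range(date(2019, 7, 11))
print(intermediate_filename(start, end))
```

Because the name depends only on the range, a retry of the same run overwrites its own output rather than leaving duplicates behind.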

Pay attention to the arguments of the BranchPythonOperator. It expects a `task_id` and a `python_callable` function. Copy and paste the code into the file branching.py and execute the command `docker-compose up -d` in the folder docker-airflow.
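A rough sketch of what a BranchPythonOperator DAG could look like, assuming Airflow 2.x import paths. The weekday/weekend rule, the task names, and the `build_dag` wrapper are all hypothetical and are not the code the original refers to. The callable returns the `task_id` of the branch to follow.

```python
from datetime import datetime


def choose_branch(**context):
    """Return the task_id to run next (hypothetical rule: weekend vs. weekday)."""
    # "ds" is the run's date as a YYYY-MM-DD string, supplied by Airflow at runtime.
    run_date = datetime.strptime(context["ds"], "%Y-%m-%d")
    return "weekend_task" if run_date.weekday() >= 5 else "weekday_task"


def build_dag():
    # Imported lazily so choose_branch can be tried without Airflow installed.
    from airflow import DAG
    from airflow.operators.python import BranchPythonOperator, PythonOperator

    with DAG(dag_id="branching", start_date=datetime(2021, 1, 1), catchup=False) as dag:
        branch = BranchPythonOperator(task_id="branch", python_callable=choose_branch)
        weekday = PythonOperator(
            task_id="weekday_task", python_callable=lambda: print("weekday")
        )
        weekend = PythonOperator(
            task_id="weekend_task", python_callable=lambda: print("weekend")
        )
        # Only the task whose task_id the callable returns is run; the other is skipped.
        branch >> [weekday, weekend]
    return dag
```

In a real DAG file you would assign `dag = build_dag()` at module level so the scheduler can discover it.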

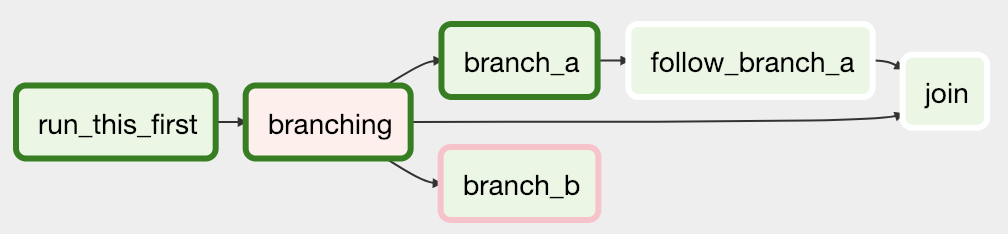

Clone the repo (Airflow 2.0, not 1.10.14) and go into it. Create a file branching.py in the folder airflow-data/dags. By default, Airflow looks at the directory /airflow/dags to search for DAGs. Since we didn't change the Airflow config, this should be the case for you too.

The S3ToRedshiftOperator loads data from S3 to Redshift via Redshift's COPY command. This is in a family of operators called Transfer Operators - operators designed to move data from one system (S3) to another (Redshift). Notice it has two Airflow connections in the parameters, one for Redshift and one for S3.

This also uses another concept - macros and templates, which let parameters be filled in at runtime. In the `s3_key` parameter, Jinja template notation is used to pass in the execution date for this DAG Run formatted as a string with no dashes (`ds_nodash` - a predefined macro in Airflow). It will look for a key formatted similarly to my_s3_bucket/20190711/my_file.csv, with the timestamp dependent on when the file ran.

When a Python task returns a dict, the return value can be unrolled to multiple XCom values: the dict will unroll to XCom values with its keys as keys. Arguments are not always deep-copied, because there are some cases where we can't deepcopy the objects (e.g. protobuf).
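To make the templated key concrete, here is a sketch that previews the path by substituting `ds_nodash` by hand, plus a possible operator definition. The preview helper is a plain-Python approximation and not Airflow's Jinja rendering. The schema, table, task name, and the `my_s3_connection` connection id are assumptions; the import path is the one used by the Amazon provider package for Airflow 2.x.

```python
from datetime import date

S3_BUCKET = "my_s3_bucket"
S3_KEY_TEMPLATE = "{{ ds_nodash }}/my_file.csv"  # rendered by Airflow at runtime


def preview_s3_path(bucket, key_template, run_date):
    """Approximate the rendered path; a manual substitution, not Airflow's engine."""
    ds_nodash = run_date.strftime("%Y%m%d")
    return "{}/{}".format(bucket, key_template.replace("{{ ds_nodash }}", ds_nodash))


def build_load_task(dag):
    # Imported lazily so the preview helper works without Airflow installed.
    from airflow.providers.amazon.aws.transfers.s3_to_redshift import (
        S3ToRedshiftOperator,
    )

    return S3ToRedshiftOperator(
        task_id="load_to_redshift",  # hypothetical task name
        schema="public",  # hypothetical schema
        table="my_table",  # hypothetical table
        s3_bucket=S3_BUCKET,
        s3_key=S3_KEY_TEMPLATE,
        redshift_conn_id="my_redshift_connection",
        aws_conn_id="my_s3_connection",  # hypothetical connection id
        copy_options=["CSV"],
        dag=dag,
    )


print(preview_s3_path(S3_BUCKET, S3_KEY_TEMPLATE, date(2019, 7, 11)))
```

The two connection ids must match connections defined in the Airflow UI or environment; only the Redshift one is named in the original.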

A DAG is defined as a Python script that represents the DAG’s structure (tasks and their dependencies) as code. The script starts with its imports: `from airflow import DAG`, followed by the imports for `BashOperator` and `PythonOperator`. Each of the tasks is implemented with an operator. Different types of operators exist, and you can create your custom operator if necessary. Connections are passed to operators as parameters, e.g. `redshift_conn_id='my_redshift_connection'`. Then it’s time to define the task dependencies. You are using a bitshift operator to do that, `>>`, meaning that t1 runs first and t2 runs second.

I want to pass parameters into an Airflow DAG and use them in a Python function. I can use the parameters in a BashOperator, but I can’t find any reference on how to use them in a Python function.
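One possible way to get parameters into a Python function, sketched under the assumption of Airflow 2.x import paths: static values can be passed through `op_kwargs`, and values supplied when the DAG is triggered can be read from `dag_run.conf` in the context. The `greet` function, the `name` parameter, and the DAG and task names are hypothetical.

```python
from datetime import datetime


def greet(name, **context):
    """Use a parameter inside a Python callable."""
    # "name" arrives via op_kwargs; a value in dag_run.conf overrides it if present.
    dag_run = context.get("dag_run")
    conf = getattr(dag_run, "conf", None) or {}
    message = "Hello, {}!".format(conf.get("name", name))
    print(message)
    return message


def build_dag():
    # Imported lazily so greet can be tried without Airflow installed.
    from airflow import DAG
    from airflow.operators.bash import BashOperator
    from airflow.operators.python import PythonOperator

    with DAG(
        dag_id="params_example", start_date=datetime(2021, 1, 1), catchup=False
    ) as dag:
        t1 = BashOperator(task_id="t1", bash_command="echo 'starting'")
        t2 = PythonOperator(
            task_id="t2", python_callable=greet, op_kwargs={"name": "world"}
        )
        # Bitshift dependency: t1 runs first, t2 runs second.
        t1 >> t2
    return dag
```

Triggering the DAG with a configuration such as `{"name": "Ada"}` would then change the greeting without editing the DAG file.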
